Five questions for Richard Weir

Richard Weir was born in Dublin, but at age 9, he knew he would come to the United States. He had seen Americans walking on the moon and thought everything about the United States was great, and so, he said, it was never a matter of if he would live in the States, but when.

While attending Trinity College in Dublin, he decided biomedical engineering was his calling: He loved using his hands, making and building things. He began to research U.S. universities and chose to attend Northwestern University in Chicago where he earned his master’s degree and Ph.D., and later headed a lab at the Rehabilitation Institute of Chicago.

Because he and his wife didn’t want their son to grow up in a big city, the family moved west to Boulder in 2008. Weir commuted to and from Chicago for a time, then interviewed for a position at the University of Colorado Boulder.

“During the interview, they kept talking about this Anschutz place. I had no idea what it was, but the Board of Regents had just approved the new bioengineering department there,” he said. In January of 2011, he joined the department. And he’s still doing what he loves most – building things.

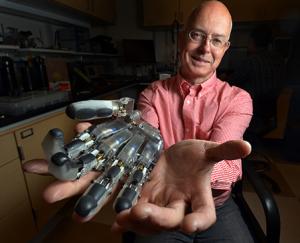

In his office on the CU Anschutz Medical Campus, you’ll find a Lego Darth Vader and a G.I. Joe, not necessarily because he’s a toy fan, but because he finds inspiration in “weird and wonderful places” – in this case, in the construction of the figures’ hands. Much of his research focuses on creating a prosthetic hand that moves realistically and senses touch, and he has made groundbreaking discoveries in those areas. But making devices that actually work for users with high levels of amputation is just as important to him as developing his “blue sky” robotic appendages.

“I’ve always been intrigued by sci-fi and robots and spaceships; my father was a professor of medicine at Trinity College; and I’ve always been someone who makes things. My friends tell me that the real reason, the deep psychological root of it all, is that I have a twin sister who lost a hand when she was 5. So my career is a kind of marriage of all of those things.”

He holds the title of research associate professor and is also director of the Veterans Administration Biomechatronics Development Laboratory, a research healthcare scientist with the VA Eastern Colorado Healthcare System, an associate research professor with the Department of Rehabilitation Medicine at Anschutz, and an adjunct professor with the Department of Integrative Physiology at CU-Boulder.

At home, he continues to build – tables, sideboards and other furniture and, because his now-grown son likes horses, fences, sheds and everything else needed to care for the animals.

1. What does your research on prosthetics entail and what are some of your successes?

Our laboratory’s ultimate goal is to develop a fully articulated hand that someone who has lost a limb could don and control like a natural hand, and that would also have a sense of touch. More broadly, our research is focused on the whole problem of replacements for people who have lost hands and arms. It turns out to be quite a challenging problem.

We approach this in a variety of ways. First, the physical hand. That is where robotics comes in, but there are many hands that are actually useful that aren’t fully articulated robot hands. These can be as simple as prosthetic hooks and grippers. So we aren’t just aiming at the blue-sky, high-flying stuff; we also work toward more practical solutions that have a more immediate application to users.

Some of the hands we’ve worked on include one for DARPA (Defense Advanced Research Projects Agency). The hand has 18 degrees of freedom with motors; each motor has its own microprocessor, and each finger has its own touch sensor that includes another microprocessor, for a total of 18 microprocessors. More recently, we’ve been working on a 3-D-printed, purely mechanical metal finger that articulates when someone manually bends it, and that will probably be more useful in the short term than our fancy robot hand for DARPA. There are some 40,000 individuals in the U.S. with limb amputation above the wrist, but there are 400,000 individuals in the U.S. who have lost a finger. There are many full-hand prosthetic options available, but the options for people with finger loss are far more limited.

We have some fancy 3-D printers we got from the Veterans Administration through an equipment grant, and that’s enabled us to think more creatively about how to better fabricate the fingers and components used in the hands. We have a metal printer, which is rare, and it allows us to fabricate designs in anthropomorphic shapes rather than rectilinear ones.

Our work on components includes wrist flexion units, humeral rotators, and shoulder joints. These joints aren’t commonly available as prosthetic components because the market is too small, but because we are a research-funded entity, we can pursue some of these not-so-profitable component projects. So we can work on shoulder joints, for instance. Shoulder joints, oddly enough, don’t exist because there are so few people who have lost an arm at the shoulder. That’s a good thing really, but for those who have lost an arm at the shoulder, it’s a very challenging fitting because there aren’t many options.

2. Once these devices are built, do you also develop the means of controlling them?

That’s where we get involved in the area of neural interfaces, where we are trying to figure out ways to communicate with the nervous system or the muscles in the residual limb. We’ve been developing something called IMES, or implantable myoelectric sensors, for more than 10 years. We were able to implant IMES in human subjects for the first time a year or so ago under a clinical trial led by the Alfred Mann Foundation at Walter Reed Hospital. We developed these small IMES sensors that are entirely self-contained – about 12 mm long by 2 mm in diameter. These little tube-like things are inserted inside the residual muscles of the amputated limb, then we place a coil around the arm in the prosthetic socket so we can talk to the implants in the body without having wires pass through the skin. It’s very exciting because this allows us to pick up signals from many more muscles in the arm than you otherwise can on the surface.

The current standard of care would be two surface electrodes, but the signal on the surface of the skin is kind of an amalgam of everything below it, so you can’t really have more than one or two signals on the surface without getting cross-talk and confusion. But if you go right into the muscle, you can pick up individual muscle signals. We send them out to a prosthesis controller that then drives the joints in the hand. What we’ve been able to do at Walter Reed is have an individual with these implants actually drive three or four degrees of freedom simultaneously. It’s something we take for granted, the ability to extend our wrist, open our hand, and move the thumb to the side all in one fluid motion. If you are using the current level of prosthetics, you have to do each one of those actions individually. But the implants allow the prosthesis user to do this full, fluid motion all in parallel, so it has been a powerful demonstration of these ideas.

The other thing that has become apparent over the years of doing this research is that if we wish to build a hand that can operate as well as yours or mine, it needs a sense of touch. You can’t do dexterous manipulation without it. Gripping to pick up something like a cup is very simple: you just open and close your fingers around it. But manipulating objects in your hands requires many joints under active control and also requires the ability to sense when you have made contact with an object. There has been a lot of research on how to simulate or measure touch – through vibration, for instance – but we’ve concluded that the best way is to talk to the sensory nerves. We’ve been trying to figure out ways to communicate with neurons in the nerve that don’t involve basically impaling them with needles, which is the current approach. Currently, a silicon needle-type electrode is stuck into the nerve tissue and you hope it hits a neuron so you can read the signals.

We want to send a signal into your neurons and stimulate the neuron so it can tell the brain you are touching something. We think we can do that using an optical solution and we’ve been collaborating with a number of researchers on the Anschutz and Boulder campuses to develop an approach that will allow us to use an optical or very small multiphoton microscope inside the limb. It essentially will shine light on an optical protein inside the neuron. When the light is at the right frequency and focused on the correct neuron, you can cause the neuron to fire an action potential train and give a signal to the user that says, “Yep, I’ve got sensation.”

3. Can your research be applied to other medical issues, for instance, paralysis?

Yes. The Holy Grail of many, many research projects and efforts at the moment is to find a good, stable neural interface that can measure the signals coming down the motor nerve and stimulate the sensory nerves so a person thinks they are having the sense of touch. If you have a stable nerve interface, you have a way to communicate with the nervous system and you can do all sorts of very powerful things. For someone with a spinal cord injury, you could bridge the break and talk to neurons in the nervous system. You could also use it to interface with the parasympathetic nervous system to modulate the control of the pancreas or the liver or the vagus nerve – a big nerve that goes up in the brain. You could talk to the optic nerve or talk to the auditory nerve and modulate vision or hearing.

We believe that if we can get this to work properly, it would have far greater impact than just our humble interface for controlling prosthetic hands.

4. In what ways have you worked to improve the prosthetic user’s experience?

Many people live with chronic pain and we would like to address that issue through an appropriate use of electrical stimulation. So we are about to explore the use of stimulation to manage pain, which would be a very important clinical application for many people.

Socket comfort also is important. A prosthesis is made up of a socket from which all of the hardware components are suspended and into which a person slips their residual limb. Socket comfort is a very big issue for most users because a socket will get hot and sweaty in the summer and cold in winter. We’re trying to figure out ways to make the socket suspend comfortably so that it doesn’t impinge on pressure points, but also to make sure it stays cool and sweat-free.

We build all these fancy robotic hands, but the things that actually decide whether a user will use the prosthesis are: “Do I have a lot of pain?” “Is my socket comfortable?” “Is the arm too heavy?” It doesn’t matter how fancy your system is; these three things will determine whether the system gets used.

We’re also working on algorithm development: an algorithm that maps the sensing from the interface and decides which motors in the arm to drive. We’ve been developing a novel algorithm to improve the control that is available to many users. We’ve been recruiting people with amputations to participate in studies to see whether this controller design is effective.

5. What would you consider the high points in your career or personal life?

The birth of my son was one of my high points and my marriage is another. Getting our DARPA hand working is another high point. We were able to deliver a very challenging hand design in time for the 50th anniversary DARPA celebration. We were working in the hotel room the night before to try it out for the first time on a patient. The tester came in and had never seen the hand and we had to fit it right there.

Getting IMES into humans has to be a high point. That’s only taken 10 years. Research is one step forward and 27 back.

More recently, we are finally getting results from our optogenetic research. After a long and hard-fought struggle to get everything working, we’ve gotten some results, so it’s been a huge relief and a big deal. There was never a concrete disproving of the idea; it was always another technical hurdle or something to be tested or disproved before we could throw an idea out. Now we have data that is interesting and it’s exciting.